Qemu-devel Re: Small Fix For Qemu Apic For Mac

QEMU (short for Quick Emulator) is a free and open-source emulator that performs hardware virtualization. QEMU is a hosted virtual machine monitor: it emulates the machine's processor through dynamic binary translation and provides a set of different hardware and device models for the machine, enabling it to run a variety of guest operating systems. It can also be used with KVM to run virtual machines at near-native speed (by taking advantage of hardware extensions such as Intel VT-x). QEMU can also do emulation for user-level processes, allowing applications compiled for one architecture to run on another.

Licensing

QEMU was written by Fabrice Bellard and is free software, mainly licensed under the GNU General Public License (GPL for short). Various parts are released under the BSD license, the GNU Lesser General Public License (LGPL) or other GPL-compatible licenses.

Operating modes

QEMU has multiple operating modes:

User-mode emulation: In this mode QEMU runs single Linux or Darwin/macOS programs that were compiled for a different instruction set. System calls are thunked for endianness and for 32/64-bit mismatches. Fast cross-compilation and cross-debugging are the main targets for user-mode emulation.
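The thunking of system-call arguments can be illustrated with a small sketch. It is not QEMU code: the structure layout, the format strings and the function name `thunk_guest_to_host` are assumptions chosen for illustration. It shows a two-field structure laid out by a 32-bit big-endian guest being converted to the layout a 64-bit little-endian host would expect.

```python
import struct

# Hypothetical structure with two integer fields (seconds, microseconds).
# Assumed guest: 32-bit, big-endian. Assumed host: 64-bit, little-endian.
GUEST_FORMAT = ">ii"  # big-endian, two 32-bit signed integers
HOST_FORMAT = "<qq"   # little-endian, two 64-bit signed integers


def thunk_guest_to_host(guest_bytes: bytes) -> bytes:
    """Convert the guest's in-memory layout into the host's layout."""
    seconds, microseconds = struct.unpack(GUEST_FORMAT, guest_bytes)
    return struct.pack(HOST_FORMAT, seconds, microseconds)


# The guest passes 8 bytes; the host receives 16 bytes with the same values.
guest_arg = struct.pack(GUEST_FORMAT, 1, 500000)
host_arg = thunk_guest_to_host(guest_arg)
print(len(guest_arg), len(host_arg), struct.unpack(HOST_FORMAT, host_arg))
```

The same idea applies in the opposite direction when results are copied back to the guest.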

System emulation: In this mode QEMU emulates a full computer system, including peripherals. It can be used to provide virtual hosting of several virtual computers on a single computer. QEMU can boot many guest operating systems, and it supports emulating several instruction sets.

KVM hosting: Here QEMU deals with the setting up and migration of KVM images. It is still involved in the emulation of hardware, but the execution of the guest is done by KVM as requested by QEMU.

Xen hosting: QEMU is involved only in the emulation of hardware; the execution of the guest is done within Xen and is totally hidden from QEMU.

Features

QEMU can save and restore the state of the virtual machine with all programs running. Guest operating systems do not need patching in order to run inside QEMU. QEMU supports the emulation of various architectures, including:

- IA-32 (x86) PCs
- x86-64 PCs
- MIPS64 Release 6 and earlier variants
- Sun's SPARC sun4m
- Sun's SPARC sun4u
- ARM development boards (Integrator/CP and Versatile/PB)
- SH4 SHIX board
- PowerPC (PReP and Power Macintosh)

The virtual machine can interface with many types of physical host hardware, including the user's hard disks, CD-ROM drives, network cards, audio interfaces, and USB devices.

USB devices can be completely emulated, or the host's USB devices can be used, although this requires administrator privileges and does not work with all devices.

Virtual disk images can be stored in a special format (qcow or qcow2) that takes up only as much disk space as the guest OS actually uses. This way, an emulated 120 GB disk may occupy only a few hundred megabytes on the host. The QCOW2 format also allows the creation of overlay images that record the difference from another (unmodified) base image file. This makes it possible to revert the emulated disk's contents to an earlier state. For example, a base image could hold a fresh install of an operating system that is known to work, while the overlay images are the ones being worked on.
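The overlay idea can be modelled in a few lines. The sketch below is a toy model of copy-on-write behaviour, not the QCOW2 on-disk format; the class names `BaseImage` and `Overlay` and the block contents are hypothetical.

```python
class BaseImage:
    """Read-only image: a mapping from block index to block contents."""

    def __init__(self, blocks):
        self._blocks = dict(blocks)

    def read(self, index):
        # Blocks that were never written read back as empty, so only
        # blocks actually in use occupy space.
        return self._blocks.get(index, b"")


class Overlay:
    """Records only the blocks that differ from the backing image."""

    def __init__(self, backing):
        self.backing = backing
        self.changed = {}

    def write(self, index, data):
        # Writes never touch the backing image.
        self.changed[index] = data

    def read(self, index):
        if index in self.changed:
            return self.changed[index]
        return self.backing.read(index)


base = BaseImage({0: b"bootloader", 1: b"fresh install"})
work = Overlay(base)
work.write(1, b"damaged system")

# Discarding the overlay and creating a new one restores the earlier state.
restored = Overlay(base)
print(work.read(1), restored.read(1))
```

With the real tools, an overlay of this kind is typically created with `qemu-img create -f qcow2 -b <base image> -F qcow2 <overlay>`.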

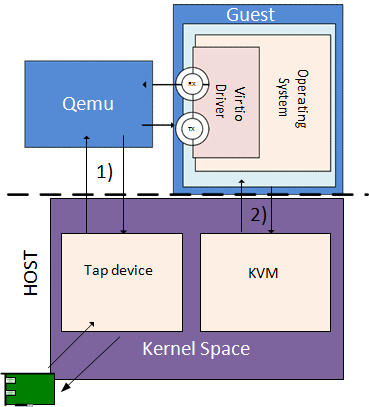

Should the guest system become unusable (through virus attack, accidental system destruction, etc.), the user can delete the overlay and reconstruct an earlier emulated disk-image version.

QEMU can emulate network cards (of different models) which share the host system's connectivity by doing network address translation, effectively allowing the guest to use the same network as the host. The virtual network cards can also connect to network cards of other instances of QEMU or to local TAP interfaces. Network connectivity can also be achieved by bridging a TUN/TAP interface used by QEMU with a non-virtual Ethernet interface on the host OS using the host OS's bridging features. QEMU integrates several services to allow the host and guest systems to communicate; for example, an integrated SMB server and network-port redirection (to allow incoming connections to the virtual machine).
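As a rough illustration of the NAT mode with port redirection, the sketch below assembles a QEMU command line in Python without running it. The helper name `build_user_net_args`, the image name `disk.qcow2` and the port numbers are assumptions; `-netdev user`, its `hostfwd` option and `-device e1000` are existing QEMU options.

```python
def build_user_net_args(host_port: int, guest_port: int) -> list[str]:
    """Arguments for user-mode (NAT) networking with one forwarded TCP port."""
    netdev = f"user,id=net0,hostfwd=tcp::{host_port}-:{guest_port}"
    # An emulated E1000 card is attached to the user-mode network backend.
    return ["-netdev", netdev, "-device", "e1000,netdev=net0"]


# Hypothetical disk image; host port 2222 is redirected to guest port 22.
command = ["qemu-system-x86_64", "-drive", "file=disk.qcow2,format=qcow2"]
command += build_user_net_args(2222, 22)
print(" ".join(command))
```

With such a setup, a connection to port 2222 on the host would reach port 22 inside the guest.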

It can also boot Linux kernels without a bootloader. QEMU does not depend on the presence of graphical output methods on the host system. Instead, it can allow one to access the screen of the guest OS via an integrated VNC server. It can also use an emulated serial line, without any screen, with applicable operating systems.
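A direct kernel boot on a serial console might be assembled as in the following sketch. The helper name `build_direct_boot_command` and the file names `bzImage` and `initrd.img` are placeholders; `-kernel`, `-initrd`, `-append` and `-nographic` are existing QEMU options. The command is only printed here, not executed.

```python
import shlex


def build_direct_boot_command(kernel, initrd=None, cmdline="console=ttyS0"):
    """Build a command that boots a Linux kernel directly, without a bootloader."""
    command = ["qemu-system-x86_64", "-kernel", kernel]
    if initrd is not None:
        command += ["-initrd", initrd]
    # -append passes the kernel command line; console=ttyS0 sends kernel
    # output to the emulated serial line, and -nographic disables the
    # graphical window and routes that serial line to the terminal.
    command += ["-append", cmdline, "-nographic"]
    return command


print(shlex.join(build_direct_boot_command("bzImage", initrd="initrd.img")))
```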

Simulating multiple CPUs running SMP is possible. QEMU does not require administrative rights to run, unless additional kernel modules for improving speed are used (like KQEMU), or when some modes of its network connectivity model are utilized.

Tiny Code Generator

The Tiny Code Generator (TCG) aims to remove the shortcoming of relying on a particular version of GCC or any compiler, instead incorporating the compiler (code generator) into other tasks performed by QEMU at run time.

The whole translation task thus consists of two parts: blocks of target code (TBs) are rewritten in TCG ops, a kind of machine-independent intermediate notation, and this notation is subsequently compiled for the host's architecture by TCG. Optional optimisation passes are performed between the two.
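The two-stage structure can be sketched with a toy translator. This is not TCG: the guest instruction names (`li`, `addi`, `add`), the intermediate ops and the three function names are invented for illustration, and generated Python source stands in for host machine code.

```python
def frontend(block):
    """Stage 1: rewrite guest instructions as machine-independent ops."""
    ops = []
    for name, dst, src in block:
        if name == "li":        # load immediate: dst <- constant
            ops.append(("movi", dst, src))
        elif name == "addi":    # dst <- dst + constant
            ops.append(("addi", dst, src))
        elif name == "add":     # dst <- dst + register src
            ops.append(("add", dst, src))
        else:
            raise ValueError(f"unknown guest instruction: {name}")
    return ops


def optimize(ops):
    """Optional pass: fold 'movi r, a' directly followed by 'addi r, b'."""
    out = []
    for op in ops:
        if out and op[0] == "addi" and out[-1][0] == "movi" and out[-1][1] == op[1]:
            out[-1] = ("movi", op[1], out[-1][2] + op[2])
        else:
            out.append(op)
    return out


def backend(ops):
    """Stage 2: compile the ops into a host-callable function."""
    lines = ["def run(regs):"]
    for name, dst, src in ops:
        if name == "movi":
            lines.append(f"    regs[{dst!r}] = {src}")
        elif name == "addi":
            lines.append(f"    regs[{dst!r}] += {src}")
        elif name == "add":
            lines.append(f"    regs[{dst!r}] += regs[{src!r}]")
    lines.append("    return regs")
    namespace = {}
    exec("\n".join(lines), namespace)
    return namespace["run"]


guest_block = [("li", "r0", 5), ("addi", "r0", 3), ("li", "r1", 2), ("add", "r0", "r1")]
translated = backend(optimize(frontend(guest_block)))
print(translated({}))
```

Supporting a new host in this model means writing a new `backend`, and supporting a new guest means writing a new `frontend`; the intermediate ops stay the same.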

TCG requires dedicated code written to support every architecture it runs on. It also requires that the target instruction translation be rewritten to take advantage of TCG ops, instead of the previously used dyngen ops. Starting with QEMU version 0.10.0, TCG ships with the QEMU stable release.

Accelerator

KQEMU was a Linux kernel module, also written by Fabrice Bellard, which notably sped up emulation of x86 or x86-64 guests on platforms with the same CPU architecture. This worked by running user-mode code (and optionally some kernel code) directly on the host computer's CPU, and by using processor and peripheral emulation only for kernel-mode and real-mode code. KQEMU could execute code from many guest OSes even if the host CPU did not support hardware-assisted virtualization. KQEMU was initially a closed-source product available free of charge, but starting from version 1.3.0pre10, it was relicensed under the GNU General Public License.

QEMU versions starting with 0.12.0 (as of August 2009) support large memory, which makes them incompatible with KQEMU. Newer releases of QEMU have completely removed support for KQEMU. QVM86 was a GNU GPLv2 licensed drop-in replacement for the then closed-source KQEMU. The developers of QVM86 ceased development in January 2007. Kernel-based Virtual Machine (KVM) has mostly taken over as the Linux-based hardware-assisted virtualization solution for use with QEMU in the wake of the lack of support for KQEMU and QVM86.

Intel's Hardware Accelerated Execution Manager (HAXM) is an open-source alternative to KVM for x86-based hardware-assisted virtualization on Windows and macOS. As of 2013 Intel mostly solicits its use with QEMU for Android development. Starting with version 2.9.0, the official QEMU includes support for HAXM.

Supported disk image formats

QEMU supports the following formats:

- macOS Universal Disk Image Format (.dmg), read-only
- Bochs, read-only
- Linux cloop, read-only
- Parallels disk image (.hdd, .hds), read-only
- QEMU copy-on-write (.qcow2, .qed, .qcow, .cow)
- VirtualBox Virtual Disk Image (.vdi)
- Virtual PC Virtual Hard Disk (.vhd)
- Virtual VFAT
- VMware Virtual Machine Disk (.vmdk)
- Raw images (.img) that contain sector-by-sector contents of a disk
- CD/DVD images (.iso) that contain sector-by-sector contents of an optical disk (e.g. for booting live OSes)

Hardware-assisted emulation

The MIPS-compatible Loongson-3 processor adds 200 new instructions to help QEMU translate x86 instructions; those new instructions lower the overhead of executing x86/CISC-style instructions in the MIPS pipeline. With additional improvements in QEMU by the Chinese Academy of Sciences, Loongson-3 achieves an average of 70% of the performance of executing native binaries while running x86 binaries from nine benchmarks.

Parallel emulation

Virtualization solutions that use QEMU are able to execute multiple virtual CPUs in parallel. For user-mode emulation QEMU maps emulated threads to host threads. For full system emulation QEMU is capable of running a host thread for each emulated virtual CPU (vCPU). This is dependent on the guest having been updated to support parallel system emulation, currently ARM, Alpha, HP-PA, PowerPC, RISC-V and s390x.

Otherwise a single thread is used to emulate all virtual CPUs (vCPUs), executing each vCPU in a round-robin manner.

Integration

VirtualBox

VirtualBox, released in January 2007, uses some of QEMU's virtual hardware devices, and has a built-in dynamic recompiler based on QEMU. As with KQEMU, VirtualBox runs nearly all guest code natively on the host via the VMM (Virtual Machine Manager) and uses the recompiler only as a fallback mechanism, for example when guest code executes in real mode. In addition, VirtualBox does a lot of code analysis and patching using a built-in disassembler in order to minimize recompilation. VirtualBox is free and open-source (available under the GPL), except for certain features.

Xen-HVM

Xen, a virtual machine monitor, can run in HVM (hardware virtual machine) mode, using Intel VT-x or AMD-V hardware x86 virtualization extensions and the ARM Cortex-A7 and Cortex-A15 virtualization extension. This means that instead of paravirtualized devices, a real set of virtual hardware is exposed to the domU, which can use real device drivers to talk to it.

QEMU includes several components: CPU emulators, emulated devices, generic devices, machine descriptions, user interface, and a debugger. The emulated devices and generic devices in QEMU make up its device models for I/O virtualization. They comprise a PIIX3 IDE (with some rudimentary PIIX4 capabilities), Cirrus Logic or plain VGA emulated video, RTL8139 or E1000 network emulation, and ACPI support. APIC support is provided by Xen. Xen-HVM has device emulation based on the QEMU project to provide I/O virtualization to the VMs. Hardware is emulated via a QEMU "device model" daemon running as a backend in dom0. Unlike other QEMU running modes (dynamic translation or KVM), virtual CPUs are completely managed by the hypervisor, which takes care of stopping them while QEMU is emulating memory-mapped I/O accesses.

KVM

KVM (Kernel-based Virtual Machine) is a FreeBSD and Linux kernel module that allows a user-space program access to the hardware virtualization features of various processors, with which QEMU is able to offer virtualization for x86, PowerPC, and S/390 guests. When the target architecture is the same as the host architecture, QEMU can make use of KVM-specific features, such as acceleration.

Win4Lin Pro Desktop

In early 2005, Win4Lin introduced Win4Lin Pro Desktop, based on a "tuned" version of QEMU and KQEMU; it hosts NT versions of Windows. In June 2006, Win4Lin released Win4Lin Virtual Desktop Server based on the same code base. Win4Lin Virtual Desktop Server serves Microsoft Windows sessions to thin clients from a Linux server. In September 2006, Win4Lin announced a change of the company name to Virtual Bridges with the release of Win4BSD Pro Desktop, a port of the product to FreeBSD and PC-BSD. Solaris support followed in May 2007 with the release of Win4Solaris Pro Desktop and Win4Solaris Virtual Desktop Server.

SerialICE

SerialICE is a QEMU-based firmware debugging tool that runs system firmware inside QEMU while accessing real hardware through a serial connection to a host system. This can be used as a cheap replacement for hardware ICEs.

WinUAE

The WinUAE Amiga emulator introduced support for using the QEMU PPC core in version 3.0.0.

ARM

QEMU emulates the ARMv7 instruction set (and down to ARMv5TEJ) with NEON extension.

It emulates full systems like the Integrator/CP board, Versatile baseboard, RealView Emulation baseboard, XScale-based PDAs, Palm Tungsten E PDA, and Nokia N800 and N810 Internet tablets. QEMU also powers the Android emulator which is part of the Android SDK (most current Android implementations are ARM based). Starting from version 2.0.0 of their Bada SDK, Samsung has chosen QEMU to help development on emulated "Wave" devices. In 1.5.0 and 1.6.0, the Samsung Exynos 4210 (dual-core Cortex-A9) and Versatile Express are emulated. In 1.6.0, the 32-bit instructions of the ARMv8 (AARCH64) architecture are emulated, but 64-bit instructions are unsupported. The Xilinx Cortex-A9-based Zynq SoC is modelled, with the following elements:

- Zynq-7000 ARM Cortex-A9 CPU
- Zynq-7000 ARM Cortex-A9 MPCore
- Triple Timer Counter
- DDR Memory Controller
- DMA Controller (PL330)
- Static Memory Controller (NAND/NOR Flash)
- SD/SDIO Peripheral Controller (SDHCI)
- Zynq Gigabit Ethernet Controller
- USB Controller (EHCI, host support only)
- Zynq UART Controller
- SPI and QSPI Controllers
- I2C Controller

SPARC

QEMU has support for both 32-bit and 64-bit SPARC architectures. For the JavaStation (sun4m architecture), Proll, a PROM replacement used in version 0.8.1, was replaced with OpenBIOS in version 0.8.2.


Linux

Linux is a Unix-like computer operating system assembled under the model of free and open-source software development and distribution. The defining component of Linux is the Linux kernel, an operating system kernel first released on September 17, 1991 by Linus Torvalds. The Free Software Foundation uses the name GNU/Linux to describe the operating system, which has led to some controversy. Linux was originally developed for computers based on the Intel x86 architecture.

Because of the dominance of Android on smartphones, Linux has the largest installed base of all operating systems. Linux is also the leading operating system on servers and other big iron systems such as mainframe computers. It is used by around 2.3% of desktop computers. The Chromebook, which runs on Chrome OS, dominates the US K–12 education market and represents nearly 20% of the sub-$300 notebook sales in the US. Linux also runs on embedded systems, which are devices whose operating system is built into the firmware and is highly tailored to the system.

This includes TiVo and similar DVR devices, network routers, facility automation controls, and televisions. Many smartphones and tablet computers run Android and other Linux derivatives. The development of Linux is one of the most prominent examples of free and open-source software collaboration. The underlying source code may be used, modified and distributed, commercially or non-commercially, by anyone under the terms of its respective licenses, such as the GNU General Public License. Typically, Linux is packaged in a form known as a Linux distribution for both desktop and server use. Distributions intended to run on servers may omit all graphical environments from the standard install. Because Linux is freely redistributable, anyone may create a distribution for any intended use.

The Unix operating system was conceived and implemented in 1969 at AT&T's Bell Laboratories in the United States by Ken Thompson, Dennis Ritchie, Douglas McIlroy, and Joe Ossanna. First released in 1971, Unix was written entirely in assembly language, as was common practice at the time. Later, in a key pioneering approach in 1973, it was rewritten in the C programming language by Dennis Ritchie. The availability of a high-level language implementation of Unix made its porting to different computer platforms easier. Due to an earlier antitrust case forbidding it from entering the computer business, AT&T was required to license the operating system's source code to anyone who asked. As a result, Unix grew quickly and became widely adopted by academic institutions and businesses. In 1984, AT&T divested itself of its regional operating companies; freed of the legal obligation requiring free licensing, it began selling Unix as a proprietary product. The GNU Project, started in 1983 by Richard Stallman, has the goal of creating a complete Unix-compatible software system composed entirely of free software. Later, in 1985, Stallman started the Free Software Foundation. By the early 1990s, many of the programs required in an operating system were completed, although low-level elements such as device drivers, daemons, and the kernel were stalled and incomplete.

Linus Torvalds has stated that if the GNU kernel had been available at the time, he would not have decided to write his own. Although not released until 1992 due to legal complications, development of 386BSD, from which NetBSD, OpenBSD and FreeBSD descended, predated that of Linux. Torvalds has also stated that if 386BSD had been available at the time, he probably would not have created Linux. Although the complete source code of MINIX was freely available, the licensing terms prevented it from being free software until the licensing changed in April 2000.

Unix

Among the systems descended from Unix is Apple's macOS, which is the Unix version with the largest installed base as of 2014.

Many Unix-like operating systems have arisen over the years, of which Linux is the most popular. Unix was originally meant to be a convenient platform for programmers developing software to be run on it and on other systems, rather than for non-programmer users. The system grew larger as it started spreading in academic circles and as users added their own tools to it.

Unix was designed to be portable, multi-tasking and multi-user in a time-sharing configuration. Its characteristic design concepts, such as plain-text data storage, a hierarchical file system, and small tools combined through a command-line interpreter, are collectively known as the Unix philosophy. By the early 1980s users began seeing Unix as a universal operating system. Under Unix, the system consists of many utilities along with the master control program, the kernel. The kernel mediates access to the hardware shared by programs, and for this it has special rights, reflected in the division between user space and kernel space. The microkernel concept was introduced in an effort to reverse the trend towards larger kernels and return to a system in which most tasks were completed by smaller utilities. In an era when a standard computer consisted of a disk for storage and a data terminal for input and output, the Unix file model worked well. However, modern systems include networking and other new devices. As graphical user interfaces developed, the file model proved inadequate to the task of handling asynchronous events such as those generated by a mouse.

In the 1980s, non-blocking I/O and the set of inter-process communication mechanisms were augmented with Unix domain sockets, shared memory, message queues, and semaphores. In microkernel implementations, functions such as network protocols could be moved out of the kernel. Multics introduced many innovations, but had many problems. Frustrated by the size and complexity of Multics but not by its aims, the last Bell Labs researchers to leave Multics, Ken Thompson, Dennis Ritchie, M. McIlroy, and J. Ossanna, decided to redo the work on a much smaller scale. The name Unics, a pun on Multics, was suggested for the project in 1970.

Salus credits Peter Neumann with the pun, while Brian Kernighan claims the coining for himself. In 1972, Unix was rewritten in the C programming language. Bell Labs produced several versions of Unix that are referred to as Research Unix. In 1975, the first source license for UNIX was sold to faculty at the University of Illinois Department of Computer Science. UIUC graduate student Greg Chesson was instrumental in negotiating the terms of this license.

During the late 1970s and early 1980s, the influence of Unix in academic circles led to adoption of Unix by commercial startups, including Sequent, HP-UX, Solaris, and AIX. In the late 1980s, AT&T Unix System Laboratories and Sun Microsystems developed System V Release 4. In the 1990s, Unix-like systems grew in popularity as Linux and BSD distributions were developed through collaboration by a worldwide network of programmers.

Central processing unit

The computer industry has used the term central processing unit (CPU) at least since the early 1960s. The form, design and implementation of CPUs have changed over the course of their history. Most modern CPUs are microprocessors, meaning they are contained on a single integrated circuit (IC) chip. An IC that contains a CPU may also contain memory, peripheral interfaces, and other components. Some computers employ a multi-core processor, which is a single chip containing two or more CPUs called cores; in that context, one can speak of such single chips as sockets. Array processors or vector processors have multiple processors that operate in parallel. There also exists the concept of virtual CPUs, which are an abstraction of dynamically aggregated computational resources.

Early computers such as the ENIAC had to be rewired to perform different tasks. Since the term CPU is generally defined as a device for software execution, the earliest devices that could rightly be called CPUs came with the advent of the stored-program computer. The idea of a stored-program computer was already present in the design of J. Presper Eckert and John William Mauchly's ENIAC, but was initially omitted so that it could be finished sooner.

On June 30, 1945, before ENIAC was made, mathematician John von Neumann distributed the paper entitled First Draft of a Report on the EDVAC. It was the outline of a stored-program computer that would eventually be completed in August 1949. EDVAC was designed to perform a number of instructions of various types. Significantly, the programs written for EDVAC were to be stored in high-speed computer memory rather than specified by the wiring of the computer. This overcame a severe limitation of ENIAC, which was the considerable time and effort required to reconfigure the computer to perform a new task. With von Neumann's design, the program that EDVAC ran could be changed simply by changing the contents of the memory.

Early CPUs were custom designs used as part of a larger computer. However, this method of designing custom CPUs for a particular application has largely given way to the development of multi-purpose processors produced in large quantities. This standardization began in the era of discrete transistor mainframes and minicomputers and has accelerated with the popularization of the integrated circuit. The IC has allowed increasingly complex CPUs to be designed and manufactured to tolerances on the order of nanometers. Both the miniaturization and standardization of CPUs have increased the presence of digital devices in modern life far beyond the limited application of dedicated computing machines. Modern microprocessors appear in electronic devices ranging from automobiles to cellphones. The so-called Harvard architecture of the Harvard Mark I, which was completed before EDVAC, also utilized a stored-program design using punched paper tape rather than electronic memory. Relays and vacuum tubes were used as switching elements; a useful computer requires thousands or tens of thousands of switching devices.

The overall speed of a system is dependent on the speed of the switches. Tube computers like EDVAC tended to average eight hours between failures, whereas relay computers like the Harvard Mark I failed very rarely. In the end, tube-based CPUs became dominant because the significant speed advantages afforded generally outweighed the reliability problems. Most of these early synchronous CPUs ran at low clock rates compared to modern microelectronic designs. Clock signal frequencies ranging from 100 kHz to 4 MHz were very common at this time. The design complexity of CPUs increased as various technologies facilitated building smaller and more reliable electronic devices.

x86

x86 is a family of backward-compatible instruction set architectures based on the Intel 8086 CPU and its Intel 8088 variant. The term x86 came into being because the names of several successors to Intel's 8086 processor end in 86. Many additions and extensions have been added to the x86 instruction set over the years, almost consistently with full backward compatibility. The architecture has been implemented in processors from Intel, Cyrix, AMD, VIA and many other companies; there are also open implementations.

In the 1980s and early 1990s, when the 8088 and 80286 were still in common use, the term x86 usually represented any 8086-compatible CPU. Today, however, x86 usually implies binary compatibility also with the 32-bit instruction set of the 80386. In Intel's naming scheme of the early 1980s, an 8086 system, including coprocessors such as the 8087 and 8089, was described as an iAPX 86 system.

There were also terms iRMX, iSBC, and iSBX, all together under the heading Microsystem 80. However, this naming scheme was quite temporary, lasting for a few years during the early 1980s. Today, x86 is ubiquitous in both stationary and portable computers, and is also used in midrange computers, workstations, and servers. A large amount of software, including operating systems such as DOS, Windows, Linux, BSD, Solaris and macOS, functions with x86-based hardware. There have been attempts, including by Intel itself, to end the market dominance of the inelegant x86 architecture designed directly from the first simple 8-bit microprocessors. Examples of this are the iAPX 432, the Intel 960, and the Intel 860. However, the continuous refinement of x86 microarchitectures, circuitry and semiconductor manufacturing would make it hard to replace x86 in many segments.

The table below lists processor models and model series implementing variations of the x86 instruction set; each line item is characterized by significantly improved or commercially successful processor microarchitecture designs. Such x86 implementations are seldom simple copies but often employ different internal microarchitectures as well as different solutions at the electronic and physical levels. Quite naturally, early compatible microprocessors were 16-bit, while 32-bit designs were developed much later. For the personal computer market, real quantities started to appear around 1990 with i386 and i486 compatible processors. Other companies which designed or manufactured x86 or x87 processors include ITT Corporation, National Semiconductor, ULSI System Technology, and Weitek. Some early versions of these microprocessors had heat dissipation problems. AMD later managed to establish itself as a serious contender with the K6 set of processors, which gave way to the very successful Athlon and Opteron. There were also other contenders, such as Centaur Technology and Rise Technology. VIA Technologies' energy-efficient C3 and C7 processors, which were designed by the Centaur company, have been sold for many years. Centaur's newest design, the VIA Nano, is their first processor with superscalar and speculative execution. It was, perhaps interestingly, introduced at about the same time as Intel's first in-order processor since the P5 Pentium, the Intel Atom.

The instruction set architecture has twice been extended to a larger word size. In 1999-2003, AMD extended this 32-bit architecture to 64 bits and referred to it as x86-64 in early documents. Intel soon adopted AMD's architectural extensions under the name IA-32e, later using the name EM64T and finally using Intel 64.

ARM

ARM, originally Acorn RISC Machine, later Advanced RISC Machine, is a family of reduced instruction set computing (RISC) architectures for computer processors, configured for various environments. ARM Holdings develops the architecture, designs cores that implement this instruction set, and licenses these designs to a number of companies that incorporate those core designs into their own products. A RISC-based computer design approach means processors require fewer transistors than the typical complex instruction set computing (CISC) x86 processors in most personal computers.

This approach reduces costs, heat and power use. These characteristics are desirable for light, portable, battery-powered devices, including smartphones, laptops and tablet computers, and other embedded systems. For supercomputers, which consume large amounts of electricity, ARM could also be a power-efficient solution. ARM Holdings periodically releases updates to architectures and core designs. Some older cores can also provide hardware execution of Java bytecodes.

The ARMv8-A architecture, announced in October 2011, adds support for a 64-bit address space. With over 100 billion ARM processors produced as of 2017, ARM is the most widely used instruction set architecture in terms of quantity produced. Current designs include the widely used Cortex cores and older classic cores. The British computer manufacturer Acorn Computers first developed the Acorn RISC Machine architecture in the 1980s to use in its personal computers. Its first ARM-based products were coprocessor modules for the BBC Micro series of computers. According to Sophie Wilson, all the tested processors at that time performed about the same, with about a 4 Mbit/second bandwidth. After testing all available processors and finding them lacking, Acorn decided it needed a new architecture. Inspired by white papers on the Berkeley RISC project, Acorn considered designing its own processor. Wilson developed the instruction set, writing a simulation of the processor in BBC BASIC that ran on a BBC Micro with a 6502 second processor. This convinced Acorn engineers they were on the right track. Wilson approached Acorn's CEO, Hermann Hauser, and requested more resources.


Hauser gave his approval and assembled a team to implement Wilson's model in hardware. The official Acorn RISC Machine project started in October 1983. They chose VLSI Technology as the silicon partner, as it was a source of ROMs and custom chips for Acorn. Wilson and Furber led the design, and they implemented it with a similar efficiency ethos as the 6502.

A key design goal was achieving low-latency input/output handling like the 6502. The 6502's memory access architecture had let developers produce fast machines without costly direct memory access hardware. The first samples of ARM silicon worked properly when first received and tested on 26 April 1985. Wilson subsequently rewrote BBC BASIC in ARM assembly language. The in-depth knowledge gained from designing the instruction set enabled the code to be very dense. The original aim of a principally ARM-based computer was achieved in 1987 with the release of the Acorn Archimedes. In 1992, Acorn once more won the Queen's Award for Technology for the ARM. The ARM2 featured a 32-bit data bus, 26-bit address space and 27 32-bit registers.

ReactOS

ReactOS is a free and open-source operating system for x86/x64 personal computers intended to be binary-compatible with computer programs and device drivers made for Windows Server 2003.

Development began in 1996, as a Windows 95 clone project. ReactOS has been noted as a potential open-source drop-in replacement for Windows and for its information on undocumented Windows APIs. As stated on the website, the main goal of the ReactOS project is to provide an operating system which is binary compatible with Windows, such that people accustomed to the user interface of Windows would find using ReactOS straightforward. The ultimate goal of ReactOS is to allow you to remove Windows and install ReactOS without the end user noticing the change. ReactOS is primarily written in C, with some elements, such as ReactOS File Explorer, written in C++. The project partially implements Windows API functionality and has been ported to the AMD64 processor architecture. Around 1996, a group of free and open-source software developers started a project called FreeWin95 to implement a clone of Windows 95. The project stalled in discussions of the design of the system. While FreeWin95 had started out with high expectations, there still had not been any builds released to the public by the end of 1997. As a result, the project members, led by coordinator Jason Filby, joined together to revive the project.


The revived project sought to duplicate the functionality of Windows NT, in creating the new project, a new name, ReactOS, was chosen. The project began development in February 1998 by creating the basis for a new NT kernel, the name ReactOS was coined during an IRC chat. While the term OS stood for operating system, the term referred to the groups dissatisfaction with –. In 2004, a copyright / license violation of ReactOS GPLed code was found when someone distributed a ReactOS fork under the name Ekush OS, in order to avoid copyright prosecution, ReactOS must be expressively completely distinct and non-derivative from Windows, a goal which needs very careful work.

A claim was made on 17 January 2006, by now former developer Hartmut Birr on the ReactOS developers mailing list that ReactOS contained code derived from disassembling Microsoft Windows, the code that Birr disputed involved the function BadStack in syscall. As well as other unspecified items. Comparing this function to disassembled binaries from Windows XP, Birr argued that the BadStack function was simply copy-pasted from Windows XP, on 27 January 2006, the developers responsible for maintaining the ReactOS code repository disabled access after a meeting was held to discuss the allegations. When approached by NewsForge, Microsoft declined to comment about the incident, contributions from several active ReactOS developers have been accepted post-audit, and low level cooperation for bug fixes still occurs. In a statement on its website, ReactOS cited differing legal definitions of what constitutes clean-room reverse engineering as a cause for the conflict, consequently, ReactOS clarified that its Intellectual Property Policy Statement requirements on clean room reverse engineering conform to US law. Contributors to its development were not affected by events.

In September 2007, with the audit nearing completion, the audit status was removed from the ReactOS homepage.

The right to study and modify software entails availability of the software source code to its users. This right is conditional on the person actually having a copy of the software. Richard Stallman used the existing term free software when he launched the GNU Project, a collaborative effort to create a freedom-respecting operating system, and the Free Software Foundation. The FSF's Free Software Definition states that users of software are free because they do not need to ask for permission to use the software. Free software thus differs from proprietary software, such as Microsoft Office, Google Docs, Sheets, and Slides or iWork from Apple, which users cannot study, change, or share. It also differs from freeware, which is a category of freedom-restricting proprietary software that does not require payment for use.

For computer programs that are covered by copyright law, software freedom is achieved with a software license. Software that is not covered by copyright law, such as software in the public domain, is free if the source code is in the public domain. Proprietary software, including freeware, uses restrictive software licences or EULAs. Users are thus prevented from changing the software, and this results in the user relying on the publisher to provide updates, help, and support. This situation is called vendor lock-in. Users often may not reverse engineer, modify, or redistribute proprietary software. Other legal and technical aspects, such as patents and digital rights management, may restrict users in exercising their rights. Free software may be developed collaboratively by volunteer computer programmers or by corporations as part of a commercial activity.

From the 1950s up until the early 1970s, it was normal for computer users to have the software freedoms associated with free software, which was typically public domain software. Software was commonly shared by individuals who used computers and by manufacturers who welcomed the fact that people were making software that made their hardware useful.

Organizations of users and suppliers, for example SHARE, were formed to facilitate the exchange of software. As software was often written in an interpreted language such as BASIC, the source code was distributed in order to use these programs. Software was also shared and distributed as printed source code in computer magazines and books.

In United States vs. IBM, filed January 17, 1969, the government charged that bundled software was anti-competitive. While some software might always be free, there would henceforth be a growing amount of software produced primarily for sale. In the 1970s and early 1980s, the industry began using technical measures to prevent computer users from being able to study or adapt the software as they saw fit. In 1980, copyright law was extended to computer programs. Software development for the GNU operating system began in January 1984, and the Free Software Foundation was founded in October 1985.

Berkeley Software Distribution (BSD) is a Unix operating system derivative developed and distributed by the Computer Systems Research Group of the University of California, Berkeley, from 1977 to 1995. Today the term BSD is often used non-specifically to refer to any of the BSD descendants, which together form a branch of the family of Unix-like operating systems. Operating systems derived from the original BSD code remain actively developed and widely used. Historically, BSD has been considered a branch of Unix, Berkeley Unix, because it shared the initial codebase. In the 1980s, BSD was widely adopted by vendors of workstation-class systems in the form of proprietary Unix variants such as DEC ULTRIX and Sun Microsystems SunOS.

This can be attributed to the ease with which it could be licensed. Later open-source descendants of BSD include FreeBSD, OpenBSD, NetBSD, Darwin, and PC-BSD. The earliest distributions of Unix from Bell Labs in the 1970s included the source code to the system, allowing researchers at universities to modify and extend it. A larger PDP-11/70 was installed at Berkeley in 1975, using money from the Ingres database project. Also in 1975, Ken Thompson took a sabbatical from Bell Labs and came to Berkeley as a visiting professor. He helped to install Version 6 Unix and started working on a Pascal implementation for the system. Graduate students Chuck Haley and Bill Joy improved Thompson's Pascal and implemented an improved text editor, ex. Other universities became interested in the software at Berkeley, and so in 1977 Joy started compiling the first Berkeley Software Distribution (1BSD). 1BSD was an add-on to Version 6 Unix rather than a complete operating system in its own right.

Some thirty copies of 1BSD were sent out, and some 75 copies of 2BSD were sent out by Bill Joy. 2.9BSD from 1983 included code from 4.1cBSD. The most recent release, 2.11BSD, was first issued in 1992. As of 2008, maintenance updates from volunteers are still continuing. A VAX computer was installed at Berkeley in 1978, but the port of Unix to the VAX architecture, UNIX/32V, did not take advantage of the VAX's virtual memory capabilities. 3BSD was also alternatively called Virtual VAX/UNIX or VMUNIX, and BSD kernel images were normally called /vmunix until 4.4BSD.

4BSD offered a number of enhancements over 3BSD, notably job control in the previously released csh, delivermail, reliable signals, and the Curses programming library. In a 1985 review of BSD releases, John Quarterman et al. noted that many installations inside the Bell System ran 4.1BSD. 4.1BSD was a response to criticisms of BSD's performance relative to the dominant VAX operating system, VMS. The 4.1BSD kernel was systematically tuned up by Bill Joy until it could perform as well as VMS on several benchmarks. Back at Bell Labs, 4.1cBSD became the basis of the 8th Edition of Research Unix.

To guide the design of 4.2BSD, a steering committee was formed. The committee met from April 1981 to June 1983. Apart from the Fast File System, several features from outside contributors were accepted, including disk quotas and job control. Sun Microsystems provided testing on its Motorola 68000 machines prior to release. The official 4.2BSD release came in August 1983. On a lighter note, it marked the debut of BSD's daemon mascot in a drawing by John Lasseter that appeared on the cover of the printed manuals distributed by USENIX.

PowerPC is a RISC instruction set architecture created by the 1991 Apple–IBM–Motorola alliance, known as AIM. PowerPC was the cornerstone of AIM's PReP and Common Hardware Reference Platform initiatives in the 1990s. It has since become niche in personal computers, but remains popular in embedded and high-performance processors. Its use in game consoles and embedded applications gave it a wide range of applications.

In addition, PowerPC CPUs are still used in AmigaOne and third-party AmigaOS 4 personal computers. The history of RISC began with IBM's 801 research project, on which John Cocke was the lead developer and where he developed the concepts of RISC in 1975–78. 801-based microprocessors were used in a number of IBM embedded products. The RT was a rapid design implementing the RISC architecture. The result was the POWER instruction set architecture, introduced with the RISC System/6000 in early 1990. The original POWER microprocessor, one of the first superscalar RISC implementations, was a high-performance, multi-chip design. IBM soon realized that a single-chip microprocessor was needed in order to scale its RS/6000 line from lower-end to high-end machines. Work on a one-chip POWER microprocessor, designated the RSC, began. In early 1991, IBM realized its design could potentially become a high-volume microprocessor used across the industry.

IBM approached Apple with the goal of collaborating on the development of a family of single-chip microprocessors based on the POWER architecture. The resulting three-way collaboration, which also included Motorola, became known as the AIM alliance, for Apple, IBM, Motorola. In 1991, the PowerPC was just one facet of an alliance among these three companies. The PowerPC chip was one of several joint ventures involving the three, in their efforts to counter the growing Microsoft-Intel dominance of personal computing. For Motorola, POWER looked like an unbelievable deal. It allowed them to sell a widely tested and powerful RISC CPU for little design cash on their own part. It also maintained ties with an important customer, Apple, and seemed to offer the possibility of adding IBM too. At this point Motorola already had its own RISC design in the form of the 88000, which was doing poorly in the market. Motorola was doing well with their 68000 family and the majority of the funding was focused on this, so the 88000 effort was somewhat starved for resources. However, the 88000 was already in production: Data General was shipping 88000 machines, and the 88000 had also achieved a number of embedded design wins in telecom applications.

The result of various requirements was the PowerPC specification. The differences between the earlier POWER instruction set and PowerPC are outlined in Appendix E of the manual for PowerPC ISA v.2.02. When the first PowerPC products reached the market, they were met with enthusiasm. In addition to Apple, both IBM and the Motorola Computer Group offered systems built around the processors. Microsoft released Windows NT 3.51 for the architecture, which was used in Motorola's PowerPC servers, and Sun Microsystems offered a version of its Solaris OS.

An operating system is system software that manages computer hardware and software resources and provides common services for computer programs. All computer programs, excluding firmware, require an operating system to function. Operating systems are found on many devices that contain a computer, such as cellular phones. The dominant desktop operating system is Microsoft Windows, with a market share of around 83%. macOS by Apple Inc. is in second place, and the varieties of Linux are in third position. Linux distributions are dominant in the server and supercomputing sectors. Other specialized classes of operating systems, such as embedded and real-time systems, exist for many applications. A single-tasking system can run only one program at a time.

Multi-tasking may be characterized in preemptive and co-operative types. In preemptive multitasking, the operating system slices the CPU time and dedicates a slot to each of the programs. Unix-like operating systems, e.g. Solaris and Linux, support preemptive multitasking. Cooperative multitasking is achieved by relying on each process to provide time to the other processes in a defined manner.
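The cooperative model can be illustrated with a small sketch. The example below is only an illustration under stated assumptions, not how any particular operating system is implemented: the names `task` and `run_cooperative` are hypothetical, and Python generators stand in for processes. Each task runs until it voluntarily yields, at which point a round-robin scheduler hands the CPU to the next task.

```python
from collections import deque
from typing import Deque, Generator, List

Task = Generator[None, None, None]


def task(name: str, steps: int, log: List[str]) -> Task:
    """Hypothetical task: performs `steps` units of work, yielding after each one."""
    for i in range(steps):
        log.append(f"{name}:{i}")
        yield  # voluntarily hand control back to the scheduler


def run_cooperative(tasks: List[Task]) -> None:
    """Round-robin scheduler: run each task until it yields, then requeue it."""
    ready: Deque[Task] = deque(tasks)
    while ready:
        current = ready.popleft()
        try:
            next(current)  # resume the task until its next yield
        except StopIteration:
            continue  # the task has finished, so it is not requeued
        ready.append(current)


log: List[str] = []
run_cooperative([task("A", 2, log), task("B", 3, log)])
print(log)  # ['A:0', 'B:0', 'A:1', 'B:1', 'B:2']
```

A task that never reaches a `yield` would keep the CPU indefinitely and starve the others, which is the main weakness of the cooperative model. A preemptive system avoids this by having the operating system interrupt tasks itself, typically when a timer expires.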

16-bit versions of Microsoft Windows used cooperative multi-tasking, while 32-bit versions of both Windows NT and Win9x used preemptive multi-tasking. Single-user operating systems have no facilities to distinguish users, but may allow multiple programs to run in tandem. A distributed operating system manages a group of distinct computers and makes them appear to be a single computer. The development of networked computers that could be linked and communicate with each other gave rise to distributed computing. Distributed computations are carried out on more than one machine. When computers in a group work in cooperation, they form a distributed system. The technique is used both in virtualization and cloud computing management, and is common in large server warehouses. Embedded operating systems are designed to be used in embedded computer systems. They are designed to operate on small machines like PDAs with less autonomy, and they are able to operate with a limited number of resources.

They are very compact and extremely efficient by design. Windows CE and Minix 3 are some examples of embedded operating systems. A real-time operating system is a system that guarantees to process events or data by a specific moment in time. A real-time operating system may be single- or multi-tasking, but when multitasking, it uses specialized scheduling algorithms so that deterministic behavior is achieved.

Early computers were built to perform a series of single tasks, like a calculator.

Basic operating system features were developed in the 1950s, such as resident monitor functions that could run different programs in succession to speed up processing.